ResNet consists of multiple shallow network blocks (residual blocks). The purpose of ResNet is to keep the model from degrading as the network depth increases.

advantages

- deeper networks

- solve model degradation problem

- converge faster

principle

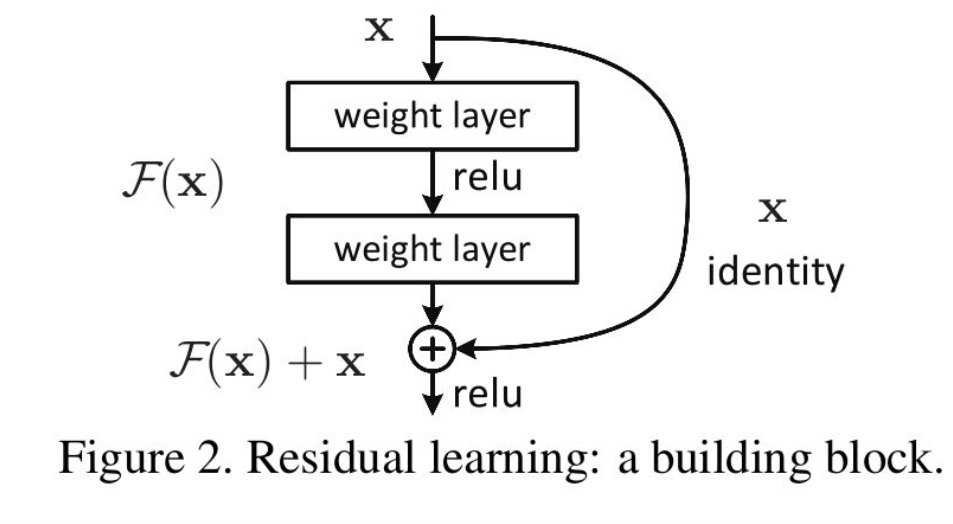

H(x)=F(x)+x means that an identity mapping term x is added to the original target function F(x). When F(x)=0, H(x) reduces to the identity mapping. The task is therefore to learn the residual F(x)=H(x)-x (output minus input) as a function of the input, rather than to learn the full input-to-output mapping H(x) directly.

The shortcut connection is added before the activation: F(x)+x is computed first, and the activation is then applied to the sum.
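The residual formula can be sketched in a few lines of plain Python. This is a toy illustration, not a real ResNet layer: it assumes fully connected weight matrices stored as nested lists, ReLU as the activation, and hypothetical helper names (`relu`, `matvec`, `residual_block`).

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]


def residual_block(x, W1, W2):
    """Compute relu(F(x) + x) with F(x) = W2 * relu(W1 * x)."""
    # Residual branch F(x): two weight layers with an activation in between.
    f = matvec(W2, relu(matvec(W1, x)))
    # The shortcut x is added BEFORE the final activation.
    summed = [fi + xi for fi, xi in zip(f, x)]
    return relu(summed)


x = [1.0, 2.0, 3.0]

# With all weights at zero, F(x) = 0 and the block is an identity mapping
# (for the non-negative input used here).
zeros = [[0.0] * 3 for _ in range(3)]
identity_out = residual_block(x, zeros, zeros)

# With W1 = I and W2 = 0.5 * I, F(x) = 0.5 * x, so the block outputs 1.5 * x.
eye = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
half = [[0.5 * e for e in row] for row in eye]
scaled_out = residual_block(x, eye, half)
```

When the weights are zero the block passes its input through unchanged, which is the behaviour that lets extra layers be added without making the mapping harder to fit.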

To keep the dimensions matched when F(x) and x have different shapes, a linear projection Ws is applied to the shortcut: H(x)=F(x,{Wi})+Ws*x
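A minimal sketch of the projection shortcut, under the same toy assumptions (fully connected layers as nested lists, ReLU activation, hypothetical helper names). In convolutional ResNets the projection Ws is typically a 1x1 convolution; here it is just a matrix that maps a 2-dimensional input to the 3-dimensional output of F.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]


def projection_block(x, W1, W2, Ws):
    """Compute relu(F(x, {Wi}) + Ws * x) when F changes the dimension."""
    f = matvec(W2, relu(matvec(W1, x)))   # residual branch, output dim 3
    shortcut = matvec(Ws, x)              # projected shortcut, also dim 3
    return relu([fi + si for fi, si in zip(f, shortcut)])


x = [1.0, 2.0]                            # input dimension 2
W1 = [[0.0, 0.0] for _ in range(3)]       # 3x2, zero so that F(x) = 0
W2 = [[0.0] * 3 for _ in range(3)]        # 3x3
Ws = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]] # 3x2 projection on the shortcut

out = projection_block(x, W1, W2, Ws)     # equals Ws * x because F(x) = 0
```

Without Ws the element-wise addition would fail here, because F(x) has three components and x has only two.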

In one building block there should be at least two weight layers, usually 2-3.

structure

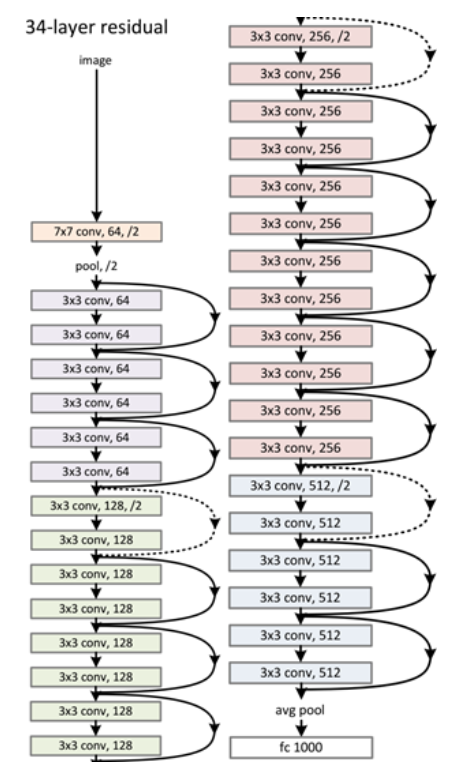

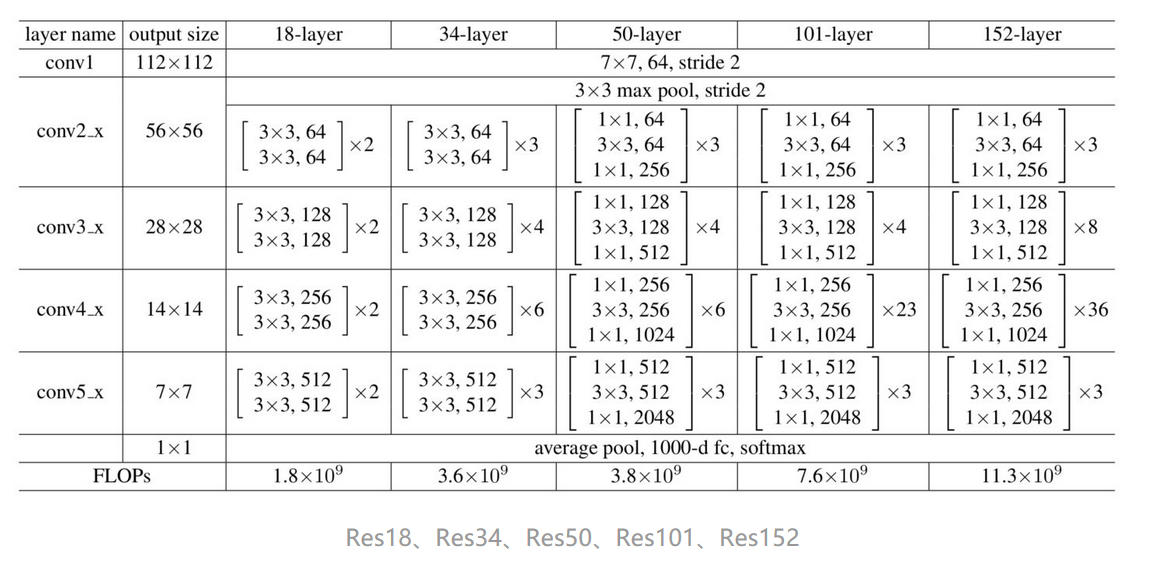

The input is first processed by a [7x7] conv layer (stride=2), then max pooled with a [3x3] filter (stride=2). After that comes a stack of residual blocks (normal building blocks for networks with fewer than 50 layers; bottleneck blocks for networks with 50 or more layers). At the end are global average pooling and a fully connected layer with softmax.
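The effect of the two stride-2 layers on the spatial size can be checked with the usual convolution output-size formula. The 224x224 input and the padding values (3 for the conv layer, 1 for the pooling layer) are assumptions that match common implementations; they are not stated in these notes.

```python
def out_size(n, kernel, stride, padding):
    """Spatial output size of a conv or pooling layer (floor mode)."""
    return (n + 2 * padding - kernel) // stride + 1


input_size = 224                                  # assumed input resolution
after_conv = out_size(input_size, kernel=7, stride=2, padding=3)
after_pool = out_size(after_conv, kernel=3, stride=2, padding=1)

print(after_conv, after_pool)                     # 112 56
```

Under these assumptions the stem reduces the resolution by a factor of 4 before the first residual block sees the feature map.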

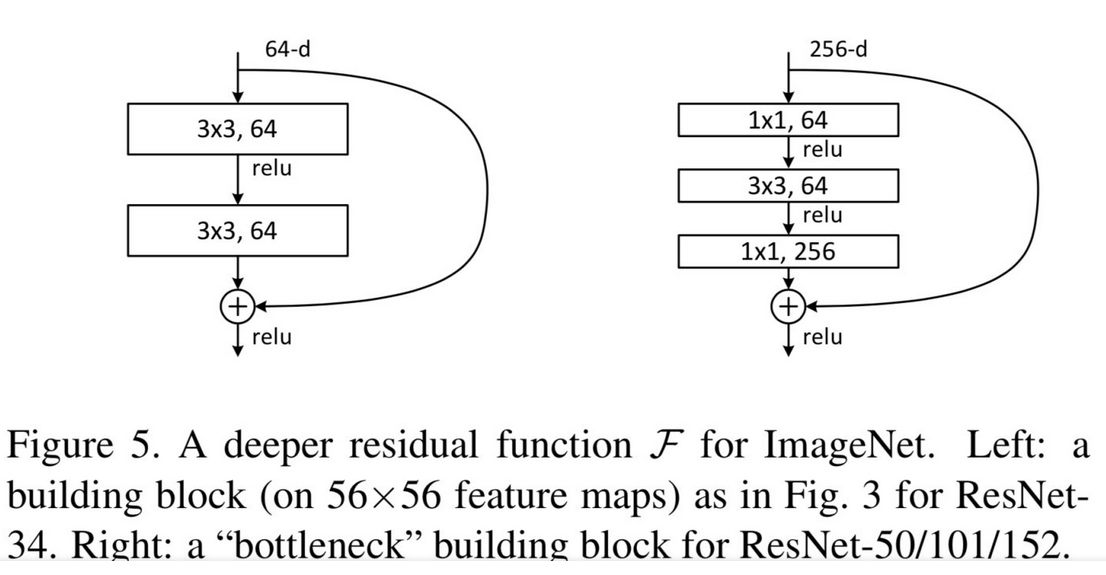

- building block vs bottleneck block

One residual block (stage) contains multiple building blocks or bottleneck blocks.
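The two block types can be compared with some simple arithmetic. The channel widths (64 and 256) and the per-stage block counts below are taken from the original ResNet configurations and are not stated in these notes; the helper names are hypothetical, and bias and batch-norm parameters are ignored.

```python
def conv_weights(kernel, c_in, c_out):
    """Number of weights in a kernel x kernel convolution (no bias)."""
    return kernel * kernel * c_in * c_out


# Building block: two 3x3 convs on 64 channels.
building_block = 2 * conv_weights(3, 64, 64)

# Bottleneck block: 1x1 reduce (256 -> 64), 3x3 (64 -> 64), 1x1 expand (64 -> 256).
bottleneck_block = (
    conv_weights(1, 256, 64)
    + conv_weights(3, 64, 64)
    + conv_weights(1, 64, 256)
)


def depth(blocks_per_stage, layers_per_block):
    """Count weight layers: stem conv + all block layers + final FC layer."""
    return 1 + sum(blocks_per_stage) * layers_per_block + 1


resnet18 = depth([2, 2, 2, 2], layers_per_block=2)   # building blocks
resnet34 = depth([3, 4, 6, 3], layers_per_block=2)   # building blocks
resnet50 = depth([3, 4, 6, 3], layers_per_block=3)   # bottleneck blocks

print(building_block, bottleneck_block)              # 73728 69632
print(resnet18, resnet34, resnet50)                  # 18 34 50
```

The bottleneck block has three layers and works on 256-channel features, yet it needs slightly fewer weights than the two-layer building block on 64 channels, which is why it is preferred for the deeper variants.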