Normalization that depends on the batch size gives poor results when the batch size differs between training and testing. Filter Response Normalization (FRN) is introduced to avoid this dependence.

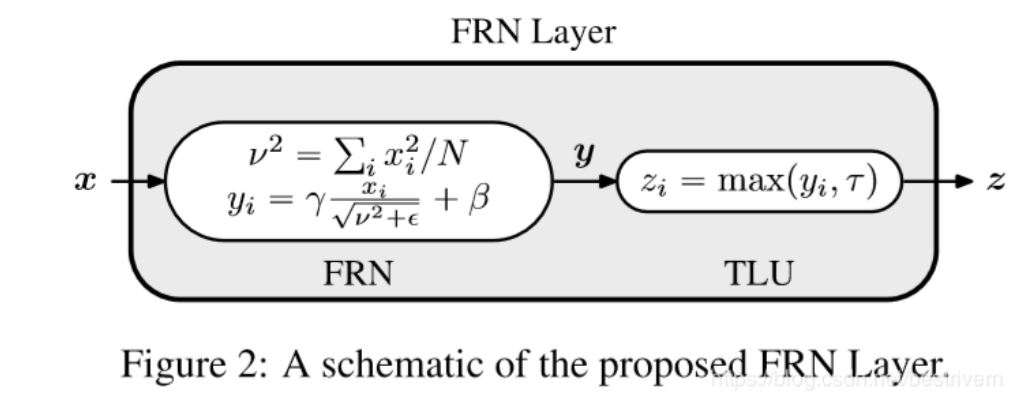

Structure

A Filter Response Normalization layer consists of FRN (Filter Response Normalization) followed by a TLU (Thresholded Linear Unit).

The input x has shape [B, W, H, C], and N = W×H is the number of pixels in one channel of one sample.

The normalization is done over axis=[1,2] (the spatial axes, W×H), so every channel is normalized separately.

No statistics over the batch dimension enter the calculation, so each sample is normalized as if the batch size were 1, and the result does not depend on the batch size.
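The computation described above can be sketched in NumPy. This is a minimal sketch rather than a reference implementation: the per-channel parameters `gamma`, `beta`, `tau` and the constant `eps` follow the usual formulation of FRN and TLU and are not defined in these notes, so treat their names and default values as assumptions.

```python
import numpy as np

def frn_tlu(x, gamma, beta, tau, eps=1e-6):
    """Sketch of FRN followed by TLU for x of shape [B, W, H, C]."""
    # Mean of squares over the spatial axes only -> shape [B, 1, 1, C].
    nu2 = np.mean(np.square(x), axis=(1, 2), keepdims=True)
    # Each channel of each sample is divided by its own root mean square.
    x_hat = x / np.sqrt(nu2 + eps)
    # Per-channel scale and shift (assumed learnable parameters).
    y = gamma * x_hat + beta
    # TLU: like ReLU, but with a per-channel threshold tau instead of 0.
    return np.maximum(y, tau)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8, 8, 3))              # B=4, W=8, H=8, C=3
gamma, beta, tau = np.ones(3), np.zeros(3), np.zeros(3)

full = frn_tlu(x, gamma, beta, tau)            # whole batch at once
single = frn_tlu(x[:1], gamma, beta, tau)      # first sample alone
same = np.allclose(full[:1], single)           # batch size does not matter
```

Because the reduction never touches axis 0, the first sample gets the same output whether it is processed alone or inside a batch of four.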

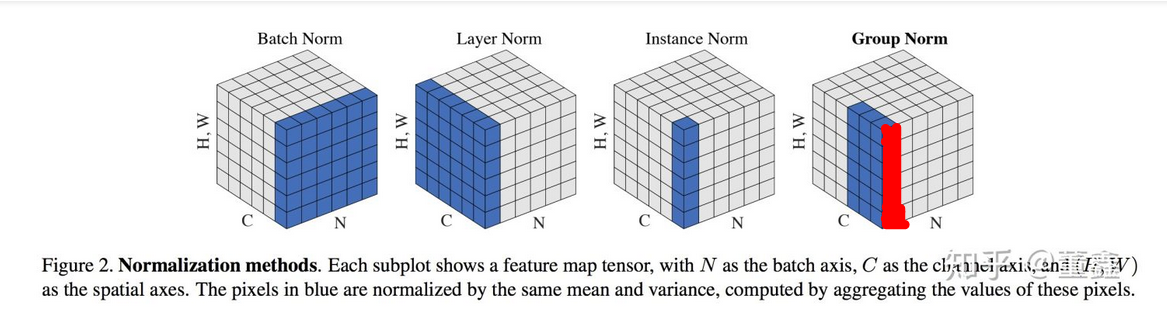

Difference from other normalization methods

The difference between FRN and other normalization methods is shown in the figure above:

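- FRN is computed over the cubes marked in red, that is, the spatial positions of one channel in one sample

- batch norm is computed over the cubes of one channel across all samples in the batch

- layer norm is computed over the cubes of all channels within one sample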
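The three cases in the list differ only in which axes the statistics are reduced over. The sketch below makes that concrete for an input of shape [B, W, H, C]. It assumes layer norm is applied to convolutional feature maps, so it reduces over both spatial axes and the channel axis. The variable names are illustrative only.

```python
import numpy as np

x = np.random.default_rng(0).normal(size=(4, 8, 8, 3))  # [B, W, H, C]

# FRN: mean of squares over the spatial axes, per sample and per channel.
frn_stat = np.mean(np.square(x), axis=(1, 2), keepdims=True)

# Batch norm: mean over batch and spatial axes, per channel.
bn_mean = np.mean(x, axis=(0, 1, 2), keepdims=True)

# Layer norm: mean over spatial and channel axes, per sample.
ln_mean = np.mean(x, axis=(1, 2, 3), keepdims=True)

print(frn_stat.shape)  # (4, 1, 1, 3)
print(bn_mean.shape)   # (1, 1, 1, 3)
print(ln_mean.shape)   # (4, 1, 1, 1)
```

Only the batch norm statistic collapses axis 0, which is why it is the one that changes when the batch size or batch contents change.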