HBase is a NoSQL database that stores huge amounts of data while still offering random access to it. It is built on the Hadoop system, which provides read/write access (all files are saved in HDFS). HBase is a column-oriented database, which is good for analysing the same attribute across large amounts of data. Its data is composed in this sequence: key/value (with timestamp) -> column -> column family -> row -> table
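The nesting described above can be sketched with plain sorted maps. This is only a toy model for illustration, not the HBase client API: the class `ToyTable` and its methods are hypothetical, and it uses `String` keys where real HBase works on byte arrays.

```java
import java.util.Comparator;
import java.util.TreeMap;

/** Toy in-memory model of the logical layout: table -> row -> column family -> column -> timestamped value. */
final class ToyTable {
    // row key -> column family -> column -> timestamp (newest first) -> value
    private final TreeMap<String, TreeMap<String, TreeMap<String, TreeMap<Long, String>>>> rows =
            new TreeMap<>();

    /** Stores one version of a cell; older versions with other timestamps are kept. */
    void put(String row, String family, String column, long timestamp, String value) {
        rows.computeIfAbsent(row, r -> new TreeMap<>())
            .computeIfAbsent(family, f -> new TreeMap<>())
            .computeIfAbsent(column, c -> new TreeMap<>(Comparator.reverseOrder()))
            .put(timestamp, value);
    }

    /** Returns the newest version of a cell, or null if the cell does not exist. */
    String getLatest(String row, String family, String column) {
        TreeMap<String, TreeMap<String, TreeMap<Long, String>>> families = rows.get(row);
        if (families == null) return null;
        TreeMap<String, TreeMap<Long, String>> columns = families.get(family);
        if (columns == null) return null;
        TreeMap<Long, String> versions = columns.get(column);
        if (versions == null || versions.isEmpty()) return null;
        return versions.firstEntry().getValue(); // first entry = highest timestamp
    }

    public static void main(String[] args) {
        ToyTable table = new ToyTable();
        table.put("row1", "info", "name", 1L, "old");
        table.put("row1", "info", "name", 2L, "new");
        System.out.println(table.getLatest("row1", "info", "name")); // the version with timestamp 2
    }
}
```

Keeping the timestamps in descending order means a read of the "current" value only has to look at the first entry of the innermost map.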

architecture & model

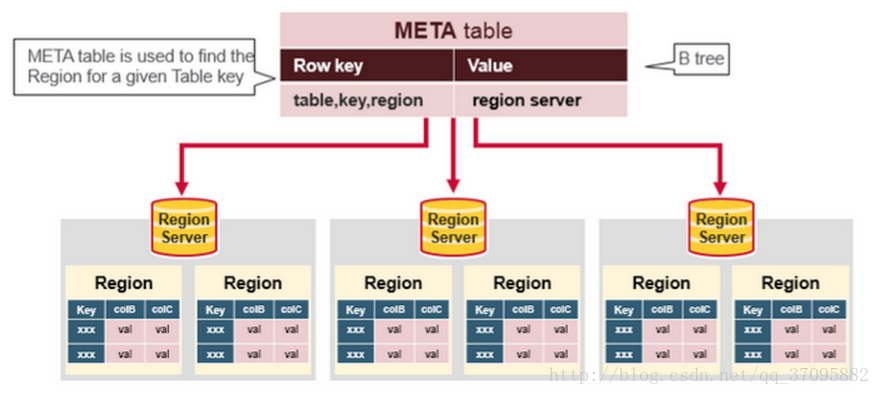

- rows in a table are sorted by row key in dictionary (lexicographic) order

- a table is divided along the row direction (by row-key range) into multiple regions

- a region splits into more regions as its data grows, and the different regions are distributed across different region servers
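A small sketch of the two ideas in the list above: dictionary ordering of row keys and splitting a region into two key ranges. The class `ToyRegionSplit` is hypothetical, and splitting at the middle key is an assumption made for illustration; it is not a description of how HBase chooses its split point. `String` ordering stands in for HBase's byte ordering, which is the same for plain ASCII keys.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeSet;

/** Toy illustration of sorted row keys and a region split (not HBase code). */
final class ToyRegionSplit {
    /** Splits a sorted set of row keys into two daughter ranges at the middle key. */
    static List<TreeSet<String>> splitAtMiddle(TreeSet<String> region) {
        if (region.size() < 2) {
            throw new IllegalArgumentException("need at least two rows to split");
        }
        List<String> keys = new ArrayList<>(region);
        String splitKey = keys.get(keys.size() / 2);
        List<TreeSet<String>> daughters = new ArrayList<>();
        daughters.add(new TreeSet<>(region.headSet(splitKey, false))); // keys before splitKey
        daughters.add(new TreeSet<>(region.tailSet(splitKey, true)));  // splitKey and everything after
        return daughters;
    }

    public static void main(String[] args) {
        TreeSet<String> region =
                new TreeSet<>(Arrays.asList("row1", "row2", "row3", "row10", "row20"));
        System.out.println(region);                // dictionary order: "row10" sorts before "row2"
        System.out.println(splitAtMiddle(region)); // two contiguous key ranges
    }
}
```

Because ordering is by dictionary and not by number, numeric row keys are often zero-padded so that they sort as expected.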

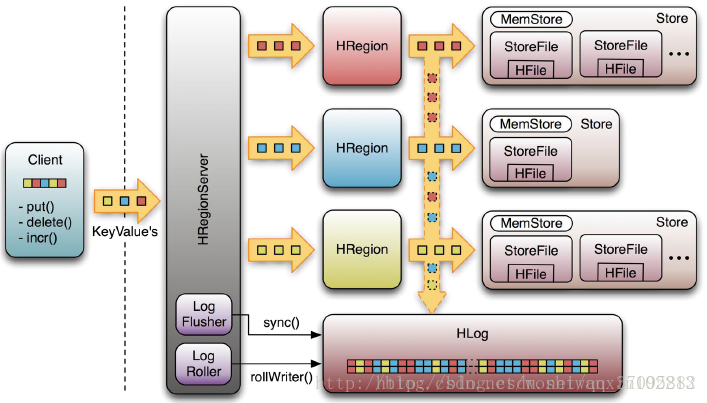

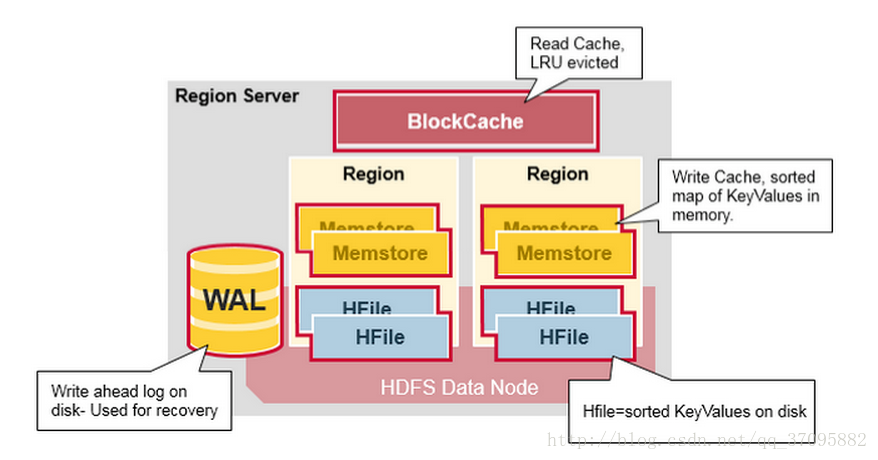

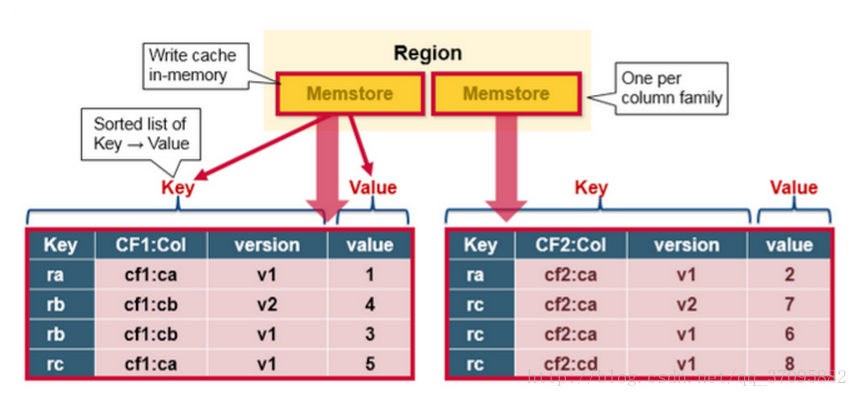

- a region is composed of one or more stores, and every store holds one column family. A store is composed of one memStore and zero or more StoreFiles; the memStore is kept in RAM and the StoreFiles are stored in HDFS

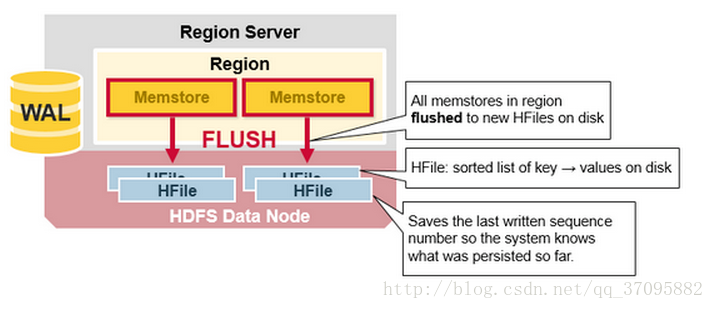

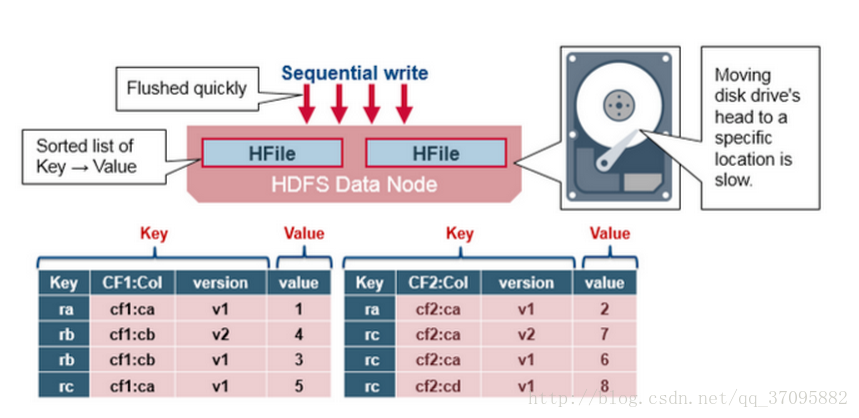

- memStore is an in-memory sorted buffer. A Put/Delete request is first recorded in the WAL and then written to the memStore. Every Column Family in an HRegion has its own memStore. Only when the memStore is full is its content flushed to an HFile in the underlying HDFS

- StoreFile (HFile) stores the HBase data (a key/value pair for each cell). The data is ordered by RowKey, Column Family, Column and timestamp. Since the flush process writes sequentially (no need to move the disk head), high write performance is possible
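The memStore and StoreFile behaviour described above can be sketched as a sorted buffer that is written out as an immutable sorted "file" once it is full. Everything here is hypothetical and simplified: `ToyStore` is not an HBase class, the threshold counts cells instead of bytes, and the "files" are in-memory maps standing in for HFiles on HDFS.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Toy store for one column family: a sorted in-memory buffer plus immutable flushed files. */
final class ToyStore {
    private final int flushThreshold; // hypothetical limit: number of cells held in memory
    private NavigableMap<String, String> memStore = new TreeMap<>();
    private final List<NavigableMap<String, String>> storeFiles = new ArrayList<>();

    ToyStore(int flushThreshold) {
        this.flushThreshold = flushThreshold;
    }

    /** Buffers the cell in sorted order and flushes when the buffer is full. */
    void put(String key, String value) {
        memStore.put(key, value);
        if (memStore.size() >= flushThreshold) {
            flush();
        }
    }

    /** Writes the already-sorted buffer out as one immutable "file" and starts a fresh buffer. */
    void flush() {
        if (memStore.isEmpty()) return;
        storeFiles.add(Collections.unmodifiableNavigableMap(memStore));
        memStore = new TreeMap<>();
    }

    /** Checks the memStore first, then the flushed files from newest to oldest. */
    String get(String key) {
        if (memStore.containsKey(key)) return memStore.get(key);
        for (int i = storeFiles.size() - 1; i >= 0; i--) {
            String value = storeFiles.get(i).get(key);
            if (value != null) return value;
        }
        return null;
    }

    int storeFileCount() {
        return storeFiles.size();
    }
}
```

Since the buffer is already sorted when it is flushed, each file can be written front to back in a single pass, which is where the sequential-write benefit comes from.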

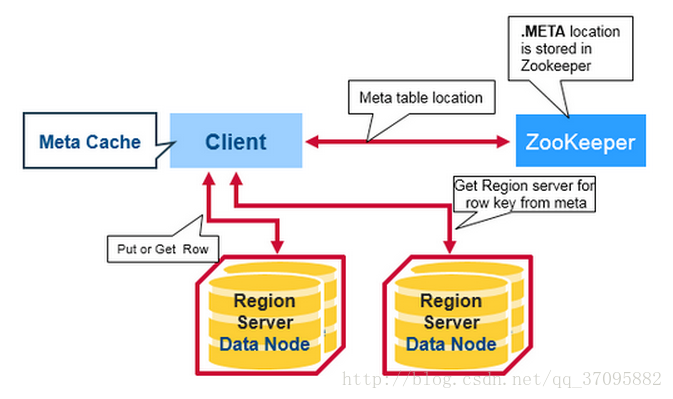

client

API for HBase access; it keeps a cache (for example of region locations) to speed up access

HMaster

- manages the HRegionServers to balance load

- manages and distributes regions

- handles DDL (data definition language: create/delete/change table) operations

- manages namespace and table metadata

- multiple HMasters can be started but only one is kept active; the inactive HMasters stay on standby as backups

HRegionServer

- manages HRegions and reads/writes table data from/to HDFS

- one HRegionServer can hold up to 1000 HRegions

- the underlying table data is stored in HDFS, so the HRegionServer and the DataNode should be located on the same server (data locality)

ZooKeeper

coordination system that stores HBase cluster metadata and state data. It makes failover between master and standby nodes possible (HMaster and NameNode support hot backup)

HRegion

An HRegion records its StartKey and EndKey. Since RowKeys are kept in order, the HRegion for a request can be located quickly. HMaster assigns each HRegion to an HRegionServer, and the HRegionServer is responsible for starting and managing the HRegion

application scenarios

- big data storage, highly concurrent operations

- random read/write

- simple read/write operations

process (HBase > 0.96)

- read process

  - get the location of the HRegionServer that hosts hbase:meta from ZooKeeper; this location is cached

  - get the HRegion location from that HRegionServer according to the RowKey the user requested; this location is cached as well

  - access the Row data through the HRegionServer that hosts the HRegion
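The caching in the read process can be sketched as follows. This is a toy model, not the HBase client: `ToyLocationCache` and `Region` are hypothetical names, and the `metaLookup` function stands in for a query against hbase:meta. The cache is keyed by region start key, so one lookup serves every row key that falls inside the same region.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

/** Toy client-side cache of region locations (not the HBase client). */
final class ToyLocationCache {
    /** A key range [startKey, endKey) and its hosting server; an empty endKey means "no upper bound". */
    static final class Region {
        final String startKey;
        final String endKey;
        final String server;

        Region(String startKey, String endKey, String server) {
            this.startKey = startKey;
            this.endKey = endKey;
            this.server = server;
        }

        boolean contains(String rowKey) {
            return rowKey.compareTo(startKey) >= 0
                    && (endKey.isEmpty() || rowKey.compareTo(endKey) < 0);
        }
    }

    private final Function<String, Region> metaLookup; // hypothetical stand-in for querying hbase:meta
    private final TreeMap<String, Region> cache = new TreeMap<>();
    private int metaLookups = 0;

    ToyLocationCache(Function<String, Region> metaLookup) {
        this.metaLookup = metaLookup;
    }

    /** Returns the server for a row key, asking the meta table only on a cache miss. */
    String serverFor(String rowKey) {
        Map.Entry<String, Region> entry = cache.floorEntry(rowKey);
        if (entry != null && entry.getValue().contains(rowKey)) {
            return entry.getValue().server; // cache hit
        }
        Region region = metaLookup.apply(rowKey); // cache miss
        metaLookups++;
        cache.put(region.startKey, region);
        return region.server;
    }

    int metaLookups() {
        return metaLookups;
    }
}
```

A real client also has to invalidate cached entries when a region moves or splits; that part is left out of this sketch.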

META table(hbase:meta)

- write process

  - when the client triggers a put request, get the HRegionServer location from hbase:meta

  - the client sends the put request to the HRegionServer

  - in the HRegionServer the put operation is first written into the WAL; then the HRegion is found according to the TableName and RowKey in the put operation, the corresponding HStore is found by Column Family, and the put operation is written into the memStore of that HStore

  - the result is returned to the client
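The server-side part of the write process can be sketched like this. It is a simplified, hypothetical model (`ToyWritePath` is not an HBase class): it covers a single region, so the step that picks the HRegion by TableName and RowKey is omitted, and the WAL is an in-memory list instead of a file on HDFS.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy write path for one region: WAL first, then the memStore of the matching column family. */
final class ToyWritePath {
    private final List<String> wal = new ArrayList<>();                        // append-only log
    private final Map<String, TreeMap<String, String>> memStores = new HashMap<>(); // one per column family

    ToyWritePath(String... families) {
        for (String family : families) {
            memStores.put(family, new TreeMap<>());
        }
    }

    /** Returns true as the acknowledgement once the edit is logged and buffered. */
    boolean put(String rowKey, String family, String column, String value) {
        TreeMap<String, String> memStore = memStores.get(family);
        if (memStore == null) {
            throw new IllegalArgumentException("unknown column family: " + family);
        }
        wal.add(rowKey + "/" + family + ":" + column + "=" + value); // 1. log the edit
        memStore.put(rowKey + "/" + column, value);                  // 2. buffer it in sorted order
        return true;                                                 // 3. acknowledge to the client
    }

    /** Reads a buffered cell back from the memStore, or null if it is not there. */
    String read(String rowKey, String family, String column) {
        TreeMap<String, String> memStore = memStores.get(family);
        return memStore == null ? null : memStore.get(rowKey + "/" + column);
    }

    int walSize() {
        return wal.size();
    }
}
```

Logging before buffering is the point of the ordering: the memStore lives in RAM, so an edit that exists only there would be lost if the server crashed, while a logged edit can be replayed.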

Implementation

shell

#create table

hbase> create 'table_name', 'column_family'

#add cell

hbase> put 'table_name', 'row_name', 'column_family:column_name', 'value'

#get

hbase> get 'table_name', 'row_name'

#count

hbase> count 'table_name'

#delete cell

hbase> delete 'table_name', 'row_name', 'column_family:column_name'

#delete table

hbase> disable 'table_name'

hbase> drop 'table_name'

### java

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HColumnDescriptor;

import org.apache.hadoop.hbase.HTableDescriptor;

import org.apache.hadoop.hbase.KeyValue;

import org.apache.hadoop.hbase.client.HBaseAdmin;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.io.BatchUpdate;

// Note: BatchUpdate and the HTable/HBaseAdmin constructors used below belong to the old (roughly 0.20-era) HBase client API; later releases deprecate or remove them.

@SuppressWarnings("deprecation")

public class HBaseTestCase {

static HBaseConfiguration cfg = null;

static {

Configuration HBASE_CONFIG = new Configuration();

HBASE_CONFIG.set("hbase.zookeeper.quorum", "192.168.50.216");

HBASE_CONFIG.set("hbase.zookeeper.property.clientPort", "2181");

cfg = new HBaseConfiguration(HBASE_CONFIG);

}

/**

* create table

*/

public static void creatTable(String tablename) throws Exception {

HBaseAdmin admin = new HBaseAdmin(cfg);

if (admin.tableExists(tablename)) {

System.out.println("table Exists!!!");

}

else{

HTableDescriptor tableDesc = new HTableDescriptor(tablename);

tableDesc.addFamily(new HColumnDescriptor("name:"));

admin.createTable(tableDesc);

System.out.println("create table ok .");

}

}

/**

* add

*/

public static void addData (String tablename) throws Exception{

HTable table = new HTable(cfg, tablename);

BatchUpdate update = new BatchUpdate("Huangyi");

update.put("name:java", "http://www.javabloger.com".getBytes());

table.commit(update);

System.out.println("add data ok .");

}

/**

* show all

*/

public static void getAllData (String tablename) throws Exception{

HTable table = new HTable(cfg, tablename);

Scan s = new Scan();

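ResultScanner ss = table.getScanner(s);

try {

for(Result r:ss){

for(KeyValue kv:r.raw()){

// print the column name followed by the cell value

System.out.print(new String(kv.getColumn()));

System.out.println(new String(kv.getValue() ));

}

}

} finally {

ss.close(); // always release the scanner when done

}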

}

public static void main (String [] args) {

try {

String tablename="tablename";

HBaseTestCase.creatTable(tablename);

HBaseTestCase.addData(tablename);

HBaseTestCase.getAllData(tablename);

}

catch (Exception e) {

e.printStackTrace();

}

}

}