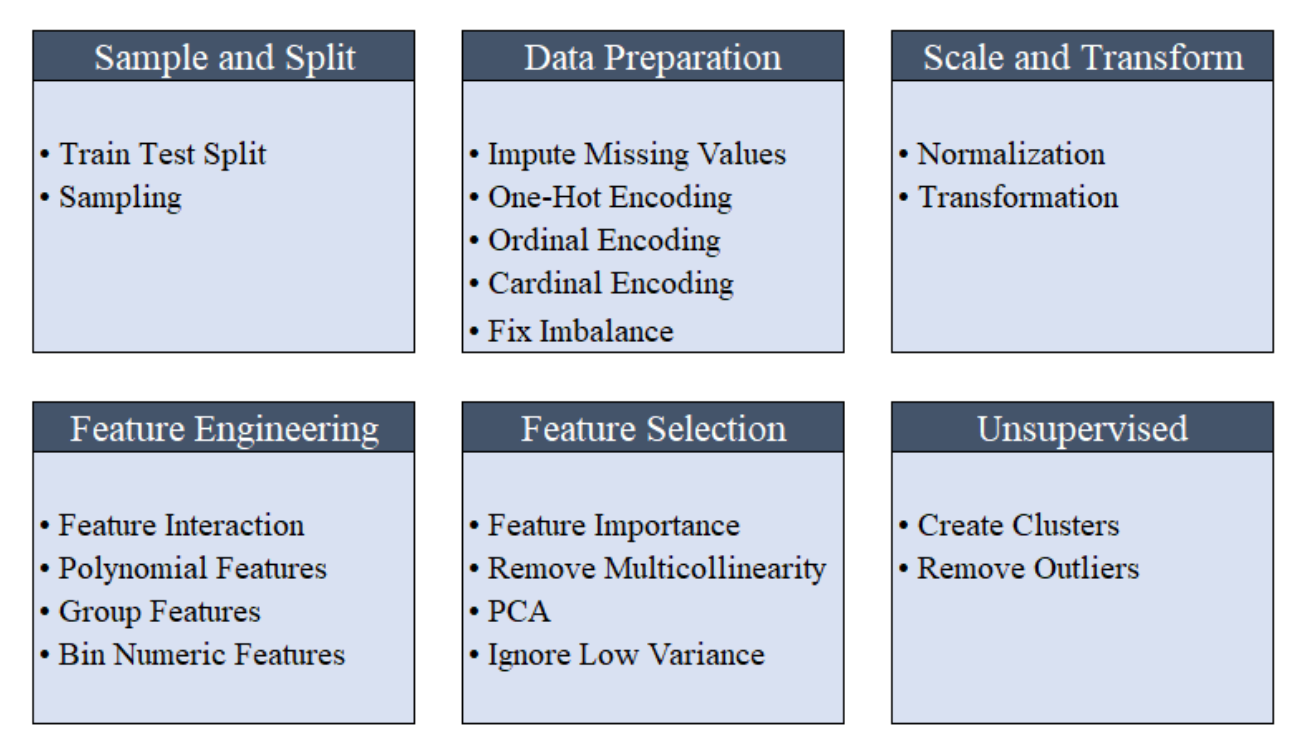

PyCaret is a low-code machine learning library in Python that automates the machine learning workflow.

Installation and usage

# install pycaret

pip install pycaret==2.0

# create notebook kernel linked with the conda environment

python -m ipykernel install --user --name yourenvname --display-name "display-name"

Initialization

# Import module

from pycaret.classification import *

# Initialize setup (when using Notebook environment)

clf1 = setup(data, target = 'target-variable')

# Initialize setup (outside of Notebook environment)

clf1 = setup(data, target = 'target-variable', html = False)

# Initialize setup (When using remote execution such as Kaggle / GitHub actions / CI-CD pipelines)

clf1 = setup(data, target = 'target-variable', html = False, silent = True)

Compare Models

The evaluation metrics used are:

- Classification: Accuracy, AUC, Recall, Precision, F1, Kappa, MCC

- Regression: MAE, MSE, RMSE, R2, RMSLE, MAPE
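To make the metric names above concrete, here is a minimal pure-Python sketch of how most of them are commonly defined. It is not PyCaret's implementation: the helper names `classification_metrics` and `regression_metrics` are hypothetical, the classification part assumes binary 0/1 labels, and AUC is left out because it needs predicted scores rather than labels.

```python
import math

def classification_metrics(y_true, y_pred):
    """Binary classification metrics from 0/1 labels (1 is the positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    n = len(pairs)

    accuracy = (tp + tn) / n
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)

    # Cohen's kappa: agreement beyond what chance alone would produce.
    p_chance = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n ** 2
    kappa = (accuracy - p_chance) / (1 - p_chance) if p_chance != 1 else 0.0

    # Matthews correlation coefficient.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0

    return {"Accuracy": accuracy, "Recall": recall, "Precision": precision,
            "F1": f1, "Kappa": kappa, "MCC": mcc}

def regression_metrics(y_true, y_pred):
    """Regression metrics; assumes non-negative values and no zero targets."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1 - ss_res / ss_tot if ss_tot else 0.0
    rmsle = math.sqrt(sum((math.log1p(p) - math.log1p(t)) ** 2
                          for t, p in zip(y_true, y_pred)) / n)
    mape = sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    return {"MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse),
            "R2": r2, "RMSLE": rmsle, "MAPE": mape}

clf = classification_metrics([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 0, 0, 0, 1])
reg = regression_metrics([2, 4, 6, 8], [3, 4, 5, 8])
```

Kappa and MCC are the two entries that correct for chance agreement, which is why they can differ sharply from Accuracy on imbalanced data.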

# import classification module

from pycaret.classification import *

# init setup

clf1 = setup(data, target = 'name-of-target')

# return best model

best = compare_models()

# return best model based on Recall

best = compare_models(sort = 'Recall') #default is 'Accuracy'

# compare specific models

best_specific = compare_models(whitelist = ['dt','rf','xgboost'])

# blacklist certain models

best_specific = compare_models(blacklist = ['catboost','svm'])

# return top 3 models based on Accuracy

top3 = compare_models(n_select = 3)

Create Model

# import classification module

from pycaret.classification import *

# init setup

clf1 = setup(data, target = 'name-of-target')

# train logistic regression model

lr = create_model('lr') #lr is the id of the model

# check the model library to see all models

models()

# train rf model using 5 fold CV

rf = create_model('rf', fold = 5)

# train svm model without CV

svm = create_model('svm', cross_validation = False)

# train xgboost model with max_depth = 10

xgboost = create_model('xgboost', max_depth = 10)

# train xgboost model on gpu

xgboost_gpu = create_model('xgboost', tree_method = 'gpu_hist', gpu_id = 0) #0 is gpu-id

# train multiple lightgbm models with different learning rates

import numpy as np

lgbms = [create_model('lightgbm', learning_rate = i) for i in np.arange(0.1,1,0.1)]

# train custom model

from gplearn.genetic import SymbolicClassifier

symclf = SymbolicClassifier(generations = 50)

sc = create_model(symclf)
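The `fold` argument used above sets how many cross-validation folds the model is scored on. As a rough illustration of what k-fold splitting means, here is a plain, unshuffled sketch in pure Python. The helper `k_fold_indices` is hypothetical, and PyCaret may shuffle or stratify its folds, which is not shown here.

```python
def k_fold_indices(n_samples, n_folds):
    """Yield (train_idx, valid_idx) pairs for plain, unshuffled k-fold CV."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // n_folds] * n_folds
    for i in range(n_samples % n_folds):
        fold_sizes[i] += 1

    start = 0
    for size in fold_sizes:
        valid = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, valid
        start += size

# Each of the 10 rows lands in the validation part of exactly one fold.
folds = list(k_fold_indices(n_samples=10, n_folds=5))
```

The score grid reported per model is then the metric computed on each validation part, plus its mean and standard deviation across folds.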

Tune Model

Tunes the hyperparameters of the model passed as an estimator.

# import classification module

from pycaret.classification import *

# init setup

clf1 = setup(data, target = 'name-of-target')

# train a decision tree model

dt = create_model('dt')

# tune hyperparameters of decision tree

tuned_dt = tune_model(dt)

# tune hyperparameters with increased n_iter

tuned_dt = tune_model(dt, n_iter = 50)

# tune hyperparameters to optimize AUC

tuned_dt = tune_model(dt, optimize = 'AUC') #default is 'Accuracy'

# tune hyperparameters with custom_grid

params = {"max_depth": np.random.randint(1, int(len(data.columns)*.85),20),

"max_features": np.random.randint(1, len(data.columns),20),

"min_samples_leaf": [2,3,4,5,6],

"criterion": ["gini", "entropy"]

}

tuned_dt_custom = tune_model(dt, custom_grid = params)

# tune multiple models dynamically

top3 = compare_models(n_select = 3)

tuned_top3 = [tune_model(i) for i in top3]
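The `n_iter` argument suggests that `tune_model` evaluates a fixed number of sampled configurations rather than every combination in the grid. Assuming that reading is right, the idea can be sketched in plain Python as a random search. This is not PyCaret's implementation: `random_search` and `toy_score` are hypothetical names, and `toy_score` stands in for a cross-validated metric.

```python
import random

def random_search(score_fn, grid, n_iter=10, seed=0):
    """Sample n_iter configurations from grid and keep the best-scoring one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        # Draw one value per hyperparameter, independently.
        params = {name: rng.choice(values) for name, values in grid.items()}
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

grid = {
    "max_depth": [2, 4, 6, 8],
    "min_samples_leaf": [2, 3, 4, 5, 6],
    "criterion": ["gini", "entropy"],
}

def toy_score(params):
    # Hypothetical stand-in for a cross-validated metric;
    # it peaks at max_depth = 6 and min_samples_leaf = 3.
    return (1.0
            - 0.05 * abs(params["max_depth"] - 6)
            - 0.02 * abs(params["min_samples_leaf"] - 3))

best_params, best_score = random_search(toy_score, grid, n_iter=50)
```

With a fixed seed, a larger `n_iter` can only match or improve the best score found, at the cost of more model fits.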

Ensemble Model

Ensemble learning functions include ensemble_model, blend_models, and stack_models.

# import classification module

from pycaret.classification import *

# init setup

clf1 = setup(data, target = 'name-of-target')

# train a decision tree model

dt = create_model('dt')

# train a bagging classifier on dt

bagged_dt = ensemble_model(dt, method = 'Bagging')

# train an adaboost classifier on dt with 100 estimators

boosted_dt = ensemble_model(dt, method = 'Boosting', n_estimators = 100)

# train a voting classifier on all models in library

blender = blend_models()

# train a voting classifier on specific models

dt = create_model('dt')

rf = create_model('rf')

adaboost = create_model('ada')

blender_specific = blend_models(estimator_list = [dt,rf,adaboost], method = 'soft')

# train a voting classifier dynamically

blender_top5 = blend_models(compare_models(n_select = 5))

# train a stacking classifier

stacker = stack_models(estimator_list = [dt,rf], meta_model = adaboost)

# stack multiple models dynamically

top7 = compare_models(n_select = 7)

stacker = stack_models(estimator_list = top7[1:], meta_model = top7[0])
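The `method = 'soft'` option passed to `blend_models` above refers to soft voting. The difference between hard and soft voting can be sketched in plain Python. The function names and the probability values are made up for illustration, and this is not how PyCaret implements blending internally.

```python
from collections import Counter

def hard_vote(probas):
    """Each model votes for its most likely class; the majority label wins."""
    labels = [max(range(len(p)), key=p.__getitem__) for p in probas]
    return Counter(labels).most_common(1)[0][0]

def soft_vote(probas):
    """Average the class probabilities across models, then pick the top class."""
    n_classes = len(probas[0])
    mean = [sum(p[c] for p in probas) / len(probas) for c in range(n_classes)]
    return max(range(n_classes), key=mean.__getitem__)

# Predicted probabilities for classes [0, 1] from three hypothetical models.
probas = [
    [0.45, 0.55],  # model A leans slightly towards class 1
    [0.45, 0.55],  # model B leans slightly towards class 1
    [0.90, 0.10],  # model C is confident in class 0
]

hard_label = hard_vote(probas)  # two of three models vote for class 1
soft_label = soft_vote(probas)  # mean probabilities are [0.6, 0.4]
```

Here the two schemes disagree: hard voting returns class 1, while soft voting returns class 0 because one confident model outweighs two marginal ones. Soft voting therefore only makes sense for models that can output probabilities.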

Predict Model

Generates predictions and evaluates the model on the hold-out set or on previously unseen data.

# train a catboost model

catboost = create_model('catboost')

# predict on holdout set (when no data is passed)

pred_holdout = predict_model(catboost)

# predict on new dataset

new_data = pd.read_csv('new-data.csv')

pred_new = predict_model(catboost, data = new_data)

Plot Model

# import classification module

from pycaret.classification import *

# init setup

clf1 = setup(data, target = 'name-of-target')

# train adaboost model

adaboost = create_model('ada')

# AUC plot

plot_model(adaboost, plot = 'auc')

# Decision Boundary

plot_model(adaboost, plot = 'boundary')

# Precision Recall Curve

plot_model(adaboost, plot = 'pr')

# Validation Curve

plot_model(adaboost, plot = 'vc')

Model interpretation with SHAP

interpret_model(xgb) #xgb is the model variable

Model saving and loading

# before saving the model, finalize it; finalize_model returns the finalized model

final_xgb = finalize_model(xgb)

save_model(final_xgb, 'diabetes_xgboost')

# reload model

model = load_model('diabetes_xgboost')

Util functions

# select and finalize the best model in the active run

best_model = automl() #returns the best model based on CV score

# select and finalize the best model based on 'F1' on hold_out set

best_model_holdout = automl(optimize = 'F1', use_holdout = True)

# save model

save_model(model, 'c:/path-to-directory/model-name')

# load model

model = load_model('c:/path-to-directory/model-name')

# retrieve score grid as pandas df

dt = create_model('dt')

dt_results = pull() #this will store dt score grid as pandas df

# get global environment variable

X_train = get_config('X_train') #returns X_train dataset after preprocessing

seed = get_config('seed') #returns seed from global environment

# set global environment variable

set_config('seed', 999) #seed set to 999 in global environment of active run

# get experiment logs as csv file

logs = get_logs() #for active run by default

# get system logs for audit

system_logs = get_system_logs() #read logs.log file from active directory

Experiment Logging

PyCaret 2.0 embeds the MLflow tracking component as a backend API and UI for logging parameters, code versions, metrics, and output files.

# import classification module

from pycaret.classification import *

# init setup

clf1 = setup(data, target = 'name-of-target', log_experiment = True, experiment_name = 'exp-name-here')

# compare models

best = compare_models()

# start mlflow server on localhost:5000 (when using notebook)

!mlflow ui

Example

# import libraries

import pandas as pd

import sys

# define command line parameters

data = sys.argv[1]

target = sys.argv[2]

# load data (replace this part with your own script)

from pycaret.datasets import get_data

input_data = get_data(data)

# init setup

from pycaret.classification import *

clf1 = setup(data = input_data, target = target, log_experiment = True)

# compare baseline models and select top5

top5 = compare_models(n_select = 5)

# tune top5 models

tuned_top5 = [tune_model(i) for i in top5]

# ensemble top5 tuned models

bagged_tuned_top5 = [ensemble_model(i, method = 'Bagging') for i in tuned_top5]

# blend top5 models

blender = blend_models(estimator_list = top5)

# stack top5 models

stacker = stack_models(estimator_list = top5[1:], meta_model = top5[0])

# select best model based on recall

best_model = automl(optimize = 'Recall')

# save model

save_model(best_model, 'c:/path-to-directory/final-model')
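The script above reads the dataset name and target column straight from `sys.argv`, so a missing argument fails with an `IndexError`. A slightly more defensive variant, sketched here with the standard-library `argparse` module, prints a usage message instead. The helper name `parse_args` is hypothetical, and `juice` / `Purchase` are only example values for the two arguments.

```python
import argparse

def parse_args(argv=None):
    """Parse the two positional arguments the example script expects."""
    parser = argparse.ArgumentParser(
        description="Run a PyCaret classification experiment.")
    parser.add_argument("data", help="dataset name passed to get_data")
    parser.add_argument("target", help="name of the target column")
    # argv=None makes argparse fall back to sys.argv[1:].
    return parser.parse_args(argv)

# Example values only; in the script itself, call parse_args() with no arguments.
args = parse_args(["juice", "Purchase"])
data, target = args.data, args.target
```

The rest of the script can stay unchanged, since `data` and `target` end up holding the same strings as before.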