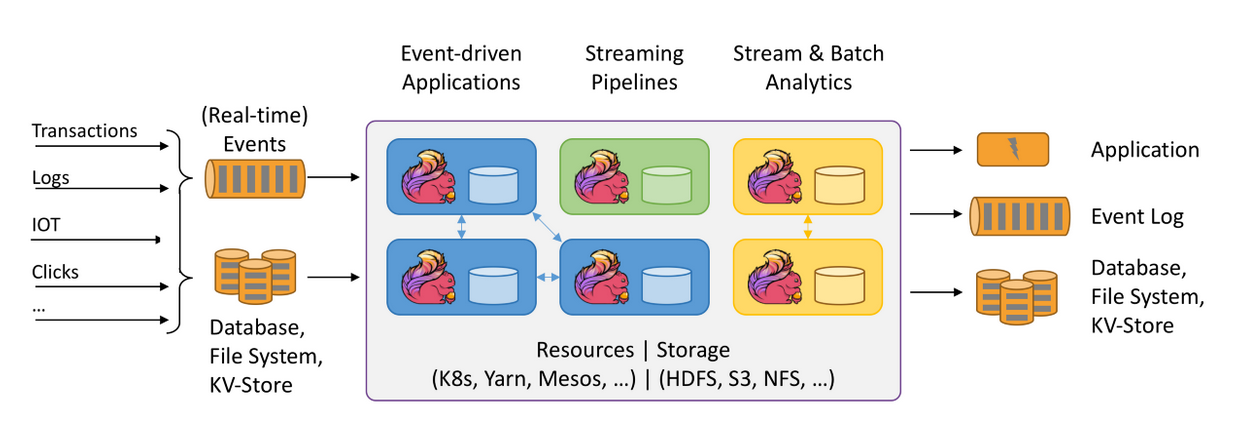

Flink is an open-source framework for stateful, large-scale, distributed, and fault-tolerant stream processing.

Apache Flink allows to ingest massive streaming data (up to several terabytes) from different sources and process it in a distributed fashion way across multiple nodes, before pushing the derived streams to other services or applications such as Apache Kafka, DBs, and Elastic search.

Flink enables real-time data analytics on streaming data and fits well for continuous Extract-transform-load (ETL) pipelines on streaming data and for event-driven applications as well.

Flink offers multiple operations on data streams or sets such as mapping, filtering, grouping, updating state, joining, defining windows, and aggregating.

Features:

Features:

- Robust Stateful Stream Processing: handle business logic that requires a contextual state while processing the data streams using its DataStream API at any scale

- Fault Tolerance: state recovery from faults based on a periodic and asynchronous checkpointing

- Exactly-Once Consistency Semantics: each event in the stream is delivered and processed exactly once in case of failures

- Scalability

- In-Memory Performance: perform all computations by accessing local, often in-memory, state yielding very low processing latencies

- seamless connectivity to data sources including Apache Kafka, Elasticsearch, Apache Cassandra, Kinesis

- Flexible deployment: can be deployed on various clusters environments (YARN, Apache Mesos, and Kubernetes)

- Complex Event Processing (CEP ) library to detect patterns (i.e., event sequences) in data streams

- Fluent APIs in Java and Scala

- Flink is a true streaming engine comparing to the micro-batch processing model of Spark Streaming

Data Abstraction

- DataStream

- DataSet

backend storage

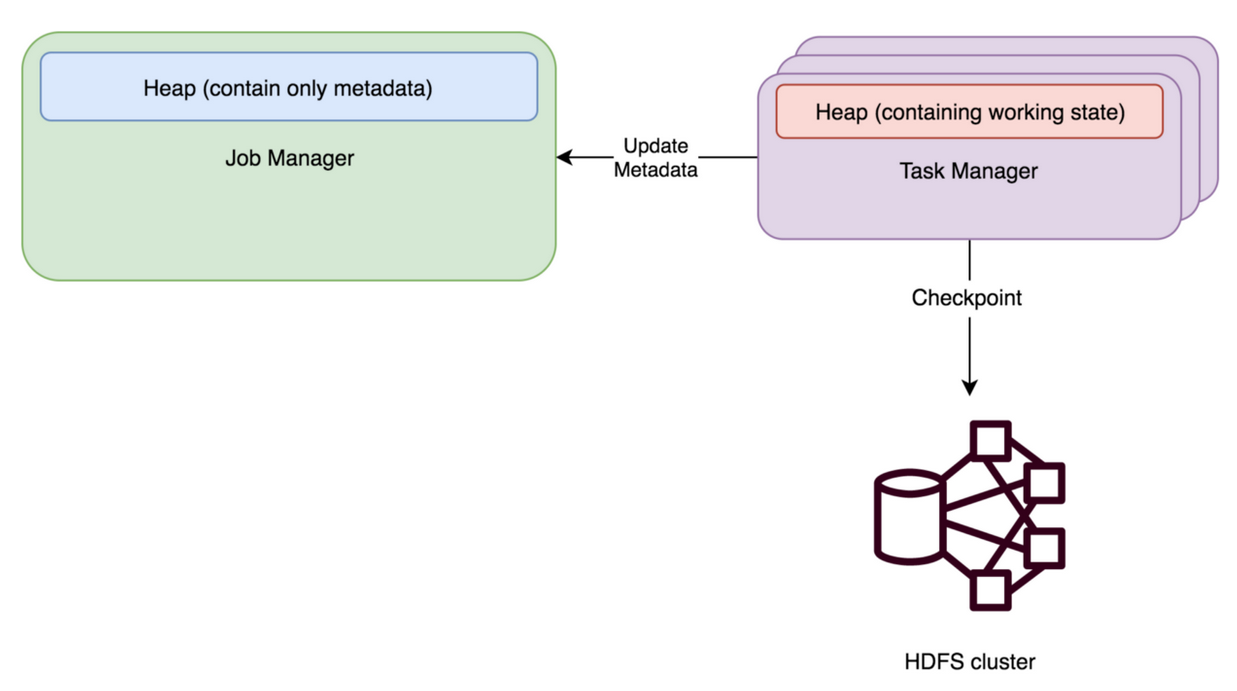

- Memory State Backend

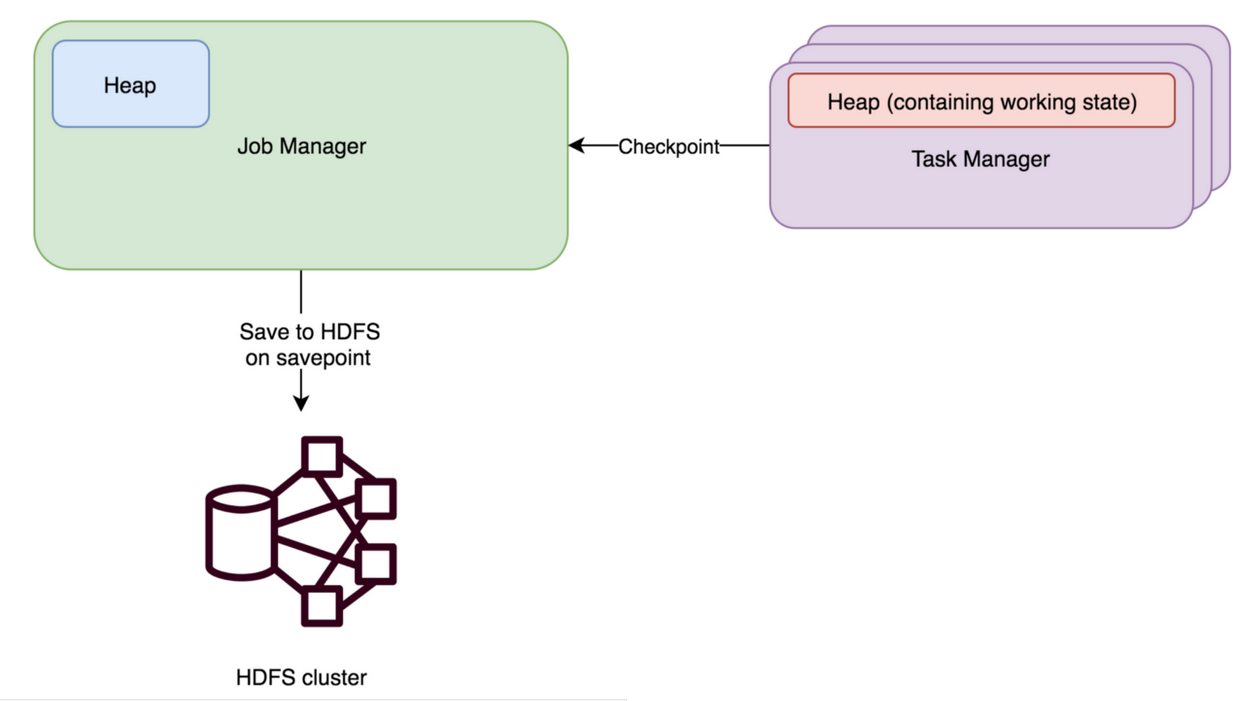

- File System Backend

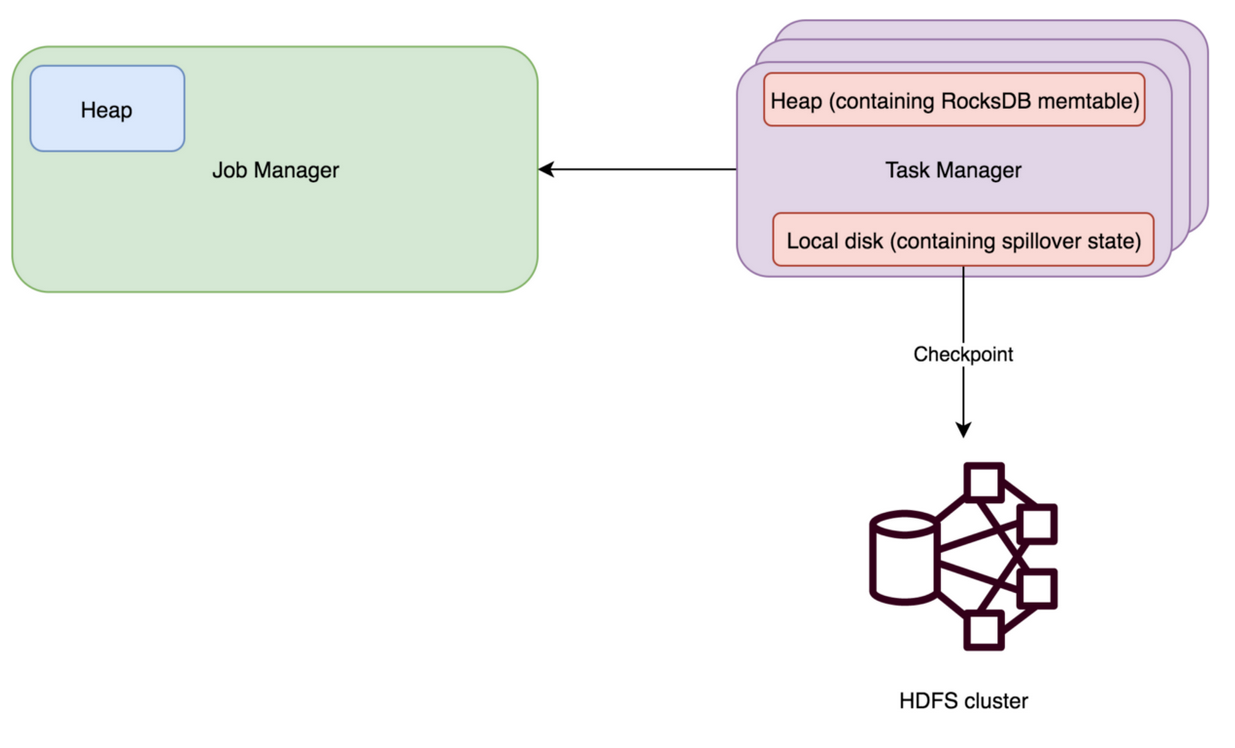

- RocksDB backend